Machine learning has boomed in recent years, and much of the conversation centers on the techniques behind generative AI for text, images, and video. The application that interests me most, though, is gameplay: using machine learning to create more believable autonomous entities in complex, interactive settings. For this project, I built an AI that plays classic Tic-Tac-Toe at several configurable difficulty levels.

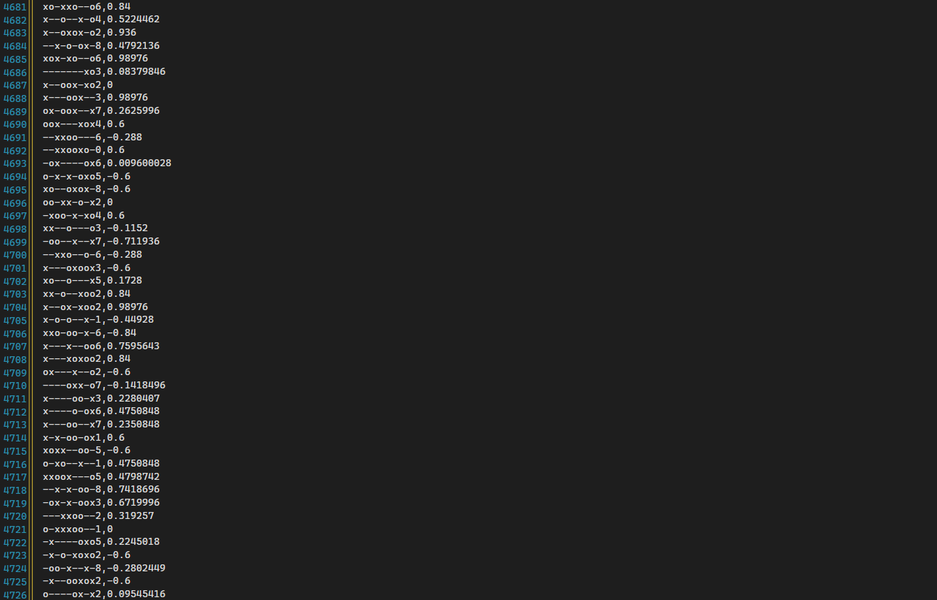

List of plays and their Q-values.

What is Q-Learning?

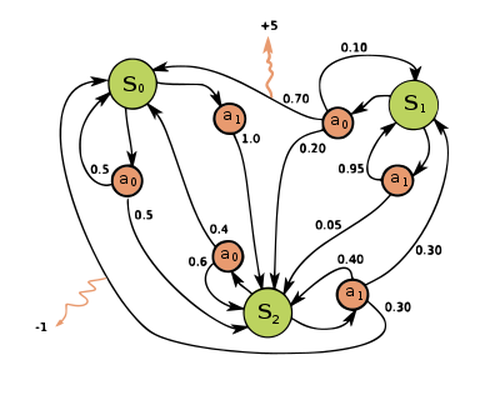

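Q-Learning is a reinforcement learning technique for learning the optimal policy of a finite Markov Decision Process (MDP). For every state and action, it estimates a Q-value: the expected cumulative “reward” obtained by taking that action in that state and acting optimally afterwards. The learned policy then simply chooses the action with the highest Q-value in each state.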
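For reference, this is the standard tabular Q-Learning update. After taking action $a$ in state $s$, receiving reward $r$, and landing in state $s'$, the estimate for $(s, a)$ is moved toward the observed reward plus the discounted value of the best action available in $s'$. Here $\alpha$ is the learning rate and $\gamma$ the discount factor; the values used in this project are not stated in the post.

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
$$

When $s'$ is terminal (the game is over), the $\max_{a'} Q(s', a')$ term is taken as $0$.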

Tic-Tac-Toe, chess, and checkers can all be modeled as Markov Decision Processes, because the current board state contains all the information needed to choose the next move; the same framework also applies to fields such as agriculture and finance. The key advantage of Q-Learning is that it is model-free: it does not require an explicit model of the process, only a definition of the states and of how rewards are obtained.

In other words, the agent never needs the transition probabilities between states; it only needs to observe the resulting state and the reward obtained after each action.
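To make the model-free idea concrete, here is a minimal tabular Q-Learning sketch for Tic-Tac-Toe. It is not the author's Unity implementation; it is a Python illustration under stated assumptions: the agent plays "X" and moves first, the opponent picks uniformly random legal moves, rewards are +1 for a win, -1 for a loss and 0 otherwise, and the hyperparameters (`alpha`, `gamma`, `epsilon`) and all function names are hypothetical. Note that `train` only ever uses the sampled `(next_board, reward, done)` returned by `step`; it never looks at the opponent's move probabilities.

```python
import random
from collections import defaultdict

# Hypothetical setup: the agent is "X" and moves first against an opponent
# that plays uniformly random legal moves. Boards are 9-character strings.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def legal_moves(board):
    """Indices of the empty cells."""
    return [i for i, cell in enumerate(board) if cell == " "]


def place(board, index, mark):
    return board[:index] + mark + board[index + 1:]


def step(board, move, rng):
    """Apply the agent's move, then a random opponent reply.

    Returns (next_board, reward, done). Assumed rewards: +1 win, -1 loss,
    0 for a draw or an unfinished game. The learner only sees this sample.
    """
    board = place(board, move, "X")
    if winner(board) == "X":
        return board, 1.0, True
    if not legal_moves(board):
        return board, 0.0, True  # board full: draw
    board = place(board, rng.choice(legal_moves(board)), "O")
    if winner(board) == "O":
        return board, -1.0, True
    if not legal_moves(board):
        return board, 0.0, True
    return board, 0.0, False


def train(episodes=20000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-Learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = defaultdict(float)  # (board, move) -> estimated Q-value, default 0.0
    for _ in range(episodes):
        board, done = " " * 9, False
        while not done:
            moves = legal_moves(board)
            if rng.random() < epsilon:
                move = rng.choice(moves)  # explore
            else:
                move = max(moves, key=lambda m: q[(board, m)])  # exploit
            nxt, reward, done = step(board, move, rng)
            # Value of the best next action; terminal states are worth 0.
            future = 0.0 if done else max(q[(nxt, m)] for m in legal_moves(nxt))
            q[(board, move)] += alpha * (reward + gamma * future - q[(board, move)])
            board = nxt
    return q
```

After `q = train()`, the greedy opening is `max(range(9), key=lambda m: q[(" " * 9, m)])`. A dictionary keyed by `(board, move)` is enough here because Tic-Tac-Toe has only a few thousand reachable positions; larger games would need a more compact representation.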

Markov Decision Process.

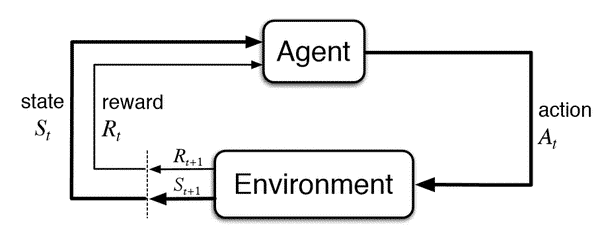

Reinforcement learning applied to video game AIs

Once the AI is trained to make optimal decisions, it is easy to make it commit “mistakes” a given percentage of the time, depending on the difficulty desired for the player. This yields a highly configurable model that suits many difficulty levels and can even be adjusted dynamically during play with minimal effort.
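The post does not show how the mistakes are injected. One simple way, assumed here, is to play the highest-Q move most of the time and a uniformly random legal move with probability `mistake_rate`. The function name, parameter names, and the preset percentages below are hypothetical and only illustrate the idea; `q` can be any mapping from `(board, move)` to a Q-value, such as a trained Q-table.

```python
import random

# Illustrative presets only; the post does not state its actual percentages.
DIFFICULTY = {"easy": 0.5, "medium": 0.25, "hard": 0.0}


def choose_move(q, board, legal, mistake_rate, rng=random):
    """Return the highest-Q legal move, except that with probability
    `mistake_rate` a uniformly random legal move is returned instead.

    q: mapping (board, move) -> Q-value; unseen pairs count as 0.0.
    mistake_rate: 0.0 always plays greedily, 1.0 always plays randomly.
    rng: anything with random() and choice(), e.g. random.Random(seed).
    """
    if not 0.0 <= mistake_rate <= 1.0:
        raise ValueError("mistake_rate must be between 0.0 and 1.0")
    if not legal:
        raise ValueError("no legal moves")
    if rng.random() < mistake_rate:
        return rng.choice(legal)  # deliberate "mistake"
    return max(legal, key=lambda m: q.get((board, m), 0.0))
```

Because the difficulty is a single number read on every move, changing it between turns (for example, lowering it after the player loses several games in a row) is all that dynamic adjustment requires. A random "mistake" can occasionally coincide with the best move, so the effective error rate is somewhat lower than `mistake_rate`.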

Basic Q-Learning workflow diagram.

Demonstration of the trained model in Unity.